Introducing WSE-3: World’s Largest Chip Powers AI Supercomputer

The WSE-3, the world’s largest computer chip, will power an AI supercomputer rated at 8 exaFLOPs, roughly eight times the throughput of the fastest supercomputer now in operation.

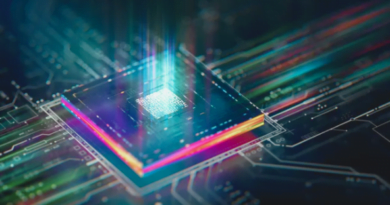

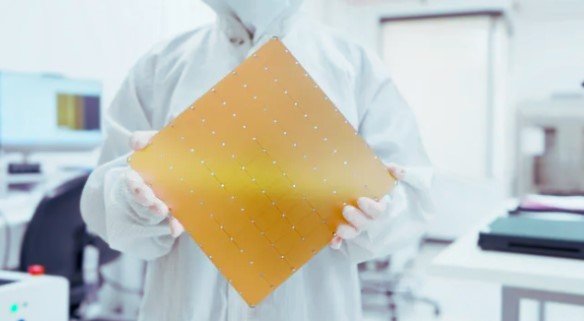

Cerebras has unveiled the Wafer Scale Engine 3 (WSE-3), a chip with four trillion transistors that will power the 8-exaFLOP Condor Galaxy 3 supercomputer. It is the world’s largest computer chip, built to drive a large-scale artificial intelligence (AI) supercomputer.

The WSE-3 is the third generation of Cerebras’ wafer-scale platform, built to power AI systems in the class of OpenAI’s GPT-4 and Anthropic’s Claude 3 Opus.

Like its predecessor, the WSE-2 of 2021, the new chip is built on a silicon wafer measuring 8.5 by 8.5 inches (21.5 by 21.5 centimeters), and it packs 900,000 AI cores. It draws the same power as the WSE-2 while delivering twice the performance, a generational doubling in keeping with Moore’s Law.

By comparison, the Nvidia H200 graphics processing unit (GPU), a mainstay of AI model training, has 80 billion transistors, one-fiftieth of the WSE-3’s count.

The chip will be the building block of the forthcoming Condor Galaxy 3 supercomputer, slated for deployment in Dallas, Texas. The machine comprises 64 Cerebras CS-3 AI systems, each powered by a WSE-3 chip, for a total of 8 exaFLOPs of compute.

Once it is linked with the existing Condor Galaxy 1 and Condor Galaxy 2 systems, the combined network will reach a total of 16 exaFLOPs.

For context, the fastest supercomputer currently in operation, Oak Ridge National Laboratory’s Frontier, delivers approximately 1 exaFLOP.
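The throughput figures above reduce to simple arithmetic. The short Python sketch below takes the quoted numbers at face value and derives what they imply: the throughput of a single CS-3 system, the combined contribution of Condor Galaxy 1 and 2, and the ratio to Frontier. The variable names are illustrative, and the comparison assumes the figures are directly comparable; vendor AI throughput and scientific benchmark results are often measured at different numerical precisions, so treat the ratio as indicative only.

```python
# Figures as quoted in the article; treat them as reported values, not measurements.
CG3_EXAFLOPS = 8          # Condor Galaxy 3 total
CG3_SYSTEMS = 64          # CS-3 systems in Condor Galaxy 3
COMBINED_EXAFLOPS = 16    # Condor Galaxy 1 + 2 + 3 together
FRONTIER_EXAFLOPS = 1     # approximate figure quoted for Frontier

PETAFLOPS_PER_EXAFLOP = 1000

# Implied throughput of a single CS-3 system, in petaFLOPs.
per_system_pflops = CG3_EXAFLOPS * PETAFLOPS_PER_EXAFLOP / CG3_SYSTEMS

# Implied combined contribution of Condor Galaxy 1 and 2, in exaFLOPs.
existing_exaflops = COMBINED_EXAFLOPS - CG3_EXAFLOPS

# Ratio of Condor Galaxy 3 to Frontier, taking both figures at face value.
ratio_vs_frontier = CG3_EXAFLOPS / FRONTIER_EXAFLOPS

print(f"Per CS-3 system: {per_system_pflops:.0f} petaFLOPs")
print(f"Condor Galaxy 1 + 2: {existing_exaflops} exaFLOPs")
print(f"Condor Galaxy 3 vs Frontier: {ratio_vs_frontier:.0f}x")
```

On these numbers, each CS-3 system accounts for 125 petaFLOPs, and the two existing Condor Galaxy systems together supply the other 8 exaFLOPs of the 16-exaFLOP network.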

The Condor Galaxy 3 supercomputer is intended to train AI systems far larger than GPT-4 and Google’s Gemini. It is designed to handle AI models with up to 24 trillion parameters, more than thirteen times GPT-4’s speculated 1.76 trillion.
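The scale comparisons in this article can be checked the same way. The sketch below computes the two ratios from the quoted figures: WSE-3 transistors against the Nvidia H200, and Condor Galaxy 3’s maximum model size against GPT-4. The GPT-4 parameter count is speculation rather than a published specification, and the constant names are illustrative.

```python
# Figures as quoted in the article.
WSE3_TRANSISTORS = 4_000_000_000_000   # four trillion
H200_TRANSISTORS = 80_000_000_000      # 80 billion
CG3_MAX_PARAMS = 24e12                 # 24 trillion parameters
GPT4_PARAMS_SPECULATED = 1.76e12       # speculated, not an official figure

# How many times more transistors the WSE-3 has than the H200.
transistor_ratio = WSE3_TRANSISTORS / H200_TRANSISTORS

# How many times larger the biggest supported model is than GPT-4's speculated size.
parameter_ratio = CG3_MAX_PARAMS / GPT4_PARAMS_SPECULATED

print(f"WSE-3 vs H200 transistors: {transistor_ratio:.0f}x")
print(f"24T-parameter model vs GPT-4: {parameter_ratio:.1f}x")
```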